Even though UTF-8 provides a shorter representation for English, and therefore for computer languages (such as C++, HTML, XML, etc.), than for any other text, it is seldom less efficient than UTF-16 for commonly used character sets.
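The size claim can be checked directly. The following is a minimal sketch, not a benchmark: the helper name `encoded_sizes` and the sample strings are hypothetical, chosen only for illustration, and UTF-16 is measured without a byte order mark.

```python
def encoded_sizes(text: str) -> tuple[int, int]:
    """Return (utf8_bytes, utf16_bytes) for the given text, UTF-16 without BOM."""
    return len(text.encode("utf-8")), len(text.encode("utf-16-le"))


# Hypothetical sample inputs, chosen for illustration only.
samples = {
    "markup": '<p class="note">Привет, мир</p>',  # Cyrillic text inside ASCII markup
    "russian": "Привет, мир",                     # the same text without markup
    "chinese": "你好，世界",                        # plain CJK text, no markup
}

for name, text in samples.items():
    utf8_bytes, utf16_bytes = encoded_sizes(text)
    print(f"{name}: UTF-8 {utf8_bytes} bytes, UTF-16 {utf16_bytes} bytes")
```

In this sketch the plain CJK sample is the one case where UTF-16 comes out smaller; as soon as ASCII markup surrounds the text, the balance shifts toward UTF-8.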

#Windows typing utf codepoints windows#

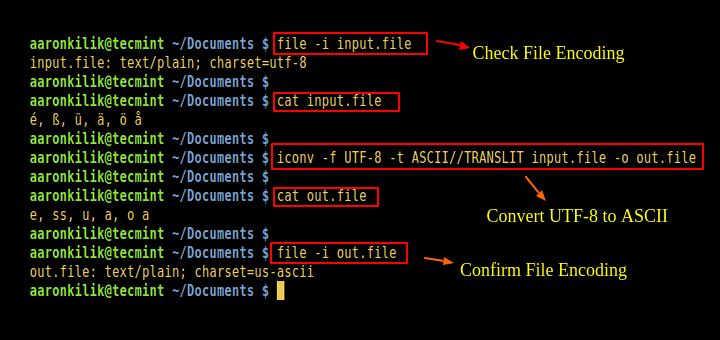

As a result of this mess, many Windows programmers are now quite confused about what is the right thing to do about text. At the same time, in the Linux and the Web worlds, there is a silent agreement that UTF-8 is the best encoding to use for Unicode.

#Windows typing utf codepoints code#

Microsoft has often mistakenly used "Unicode" and "widechar" as synonyms for both "UCS-2" and "UTF-16". Furthermore, since UTF-8 cannot be set as the encoding for the narrow string WinAPI, one must compile one's code with the UNICODE define. Windows C++ programmers are taught that Unicode must be done with "widechars" (or worse, with the compiler-setting-dependent TCHARs, which allow the programmer to opt out of supporting all Unicode code points).

In 1988, Joseph D. Becker published the first Unicode draft proposal. At the basis of his design was the naïve assumption that 16 bits per character would suffice. In 1991, the first version of the Unicode standard was published, with code points limited to 16 bits. In the following years many systems added support for Unicode and switched to the UCS-2 encoding. It was especially attractive for new technologies, such as the Qt framework (1992), Windows NT 3.1 (1993) and Java (1995). However, it was soon discovered that 16 bits per character would not do for Unicode. In 1996, the UTF-16 encoding was created so that existing systems would be able to work with non-16-bit characters. This effectively nullified the rationale behind choosing a 16-bit encoding in the first place, namely being a fixed-width encoding. Currently Unicode spans over 109449 characters, about 74500 of them being CJK ideographs.

In truth, Unicode is inherently complicated, and there is no universal definition of such a thing as a "Unicode character". We see no particular reason to favor Unicode code points over Unicode grapheme clusters, code units or perhaps even words in a language as the basic unit of text. On the other hand, seeing UTF-8 code units (bytes) as a basic unit of text seems particularly useful for many tasks, such as parsing commonly used textual data formats. This is due to a particular feature of this encoding. Graphemes, code units, code points and other relevant Unicode terms are explained in Section 5. Operations on encoded text strings are discussed in Section 7.
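To illustrate why bytes work well as a parsing unit, here is a minimal sketch. It assumes that the feature in question is that every byte of a multi-byte UTF-8 sequence has its high bit set, so an ASCII byte can never occur inside the encoding of another character; the passage above does not name the feature, so that reading is ours. The helper `split_fields` and the sample record are hypothetical.

```python
def split_fields(line: bytes, delimiter: bytes = b",") -> list[bytes]:
    """Split a UTF-8 encoded line on an ASCII delimiter without decoding it."""
    if len(delimiter) != 1 or delimiter[0] >= 0x80:
        raise ValueError("delimiter must be a single ASCII byte")
    return line.split(delimiter)


# Hypothetical record mixing Latin, CJK and Cyrillic text.
record = "Zürich,東京,Санкт-Петербург".encode("utf-8")
fields = split_fields(record)

# The split operated on raw bytes, yet every field is still valid UTF-8:
# no multi-byte sequence was cut in the middle.
decoded = [field.decode("utf-8") for field in fields]
print(decoded)
```

The parser never needs to know where code points begin or end; it only looks for the ASCII delimiter byte and passes everything else through untouched.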

#Windows typing utf codepoints software#

In this manifesto, we will also explain what a programmer should be doing if they do not want to dive into all the complexities of Unicode and do not really care about what is inside the string.

Furthermore, we would like to suggest that counting or otherwise iterating over Unicode code points should not be seen as a particularly important task in text processing scenarios. Many developers mistakenly see code points as a kind of successor to ASCII characters. This led to software design decisions such as Python's O(1) code point access in strings.
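A small sketch of why a code point count is a weak proxy for "characters": the same user-perceived letter can be one or two code points, and a single code point can take two UTF-16 code units. The helper name `unit_counts` is hypothetical; the sample strings are written with escapes so the code points are explicit.

```python
import unicodedata


def unit_counts(text: str) -> tuple[int, int, int]:
    """Return (code points, UTF-8 code units, UTF-16 code units) for text."""
    code_points = len(text)  # a Python str is indexed by code point
    utf8_units = len(text.encode("utf-8"))
    utf16_units = len(text.encode("utf-16-le")) // 2
    return code_points, utf8_units, utf16_units


precomposed = "\u00e9"     # "é" as a single code point
decomposed = "e\u0301"     # "e" followed by COMBINING ACUTE ACCENT
emoji = "\U0001F600"       # a code point outside the 16-bit range

for label, text in [("precomposed", precomposed),
                    ("decomposed", decomposed),
                    ("emoji", emoji)]:
    print(label, unit_counts(text))

# Both spellings of "é" look the same to the user and are canonically equivalent.
print(unicodedata.normalize("NFC", decomposed) == precomposed)
```

None of the three counts corresponds to what a user would call the number of characters, which is why fast code point indexing buys less than it appears to.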

It is in the user's bill of rights to mix any number of languages in any text string. Across the industry, many localization-related bugs have been blamed on programmers' lack of knowledge of Unicode. We, however, believe that for an application that is not supposed to specialize in text, the infrastructure can and should make it possible for the program to be unaware of encoding issues. For instance, a file copy utility should not be written differently to support non-English file names.

We believe that, even on Windows, the arguments presented in this document outweigh the lack of native UTF-8 support. We also recommend forgetting forever what "ANSI codepages" are and what they were used for.

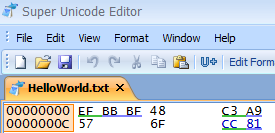

This document contains special characters. Without proper rendering support, you may see question marks, boxes, or other symbols.

The purpose of this document is to promote usage and support of the UTF-8 encoding, and to convince the reader that it should be the default choice of encoding for storing text strings in memory or on disk, for communication and for all other uses. We believe that our approach improves performance, reduces the complexity of software and helps prevent many Unicode-related bugs. We suggest that other encodings of Unicode (or of text in general) belong to rare edge cases of optimization and should be avoided by mainstream users. In particular, we believe that the very popular UTF-16 encoding (often mistakenly referred to as "widechar" or simply "Unicode" in the Windows world) has no place in library APIs except for specialized text processing libraries.

This document also recommends choosing UTF-8 for internal string representation in Windows applications, despite the fact that this standard is less popular there, both for historical reasons and because of the lack of native UTF-8 support in the API.
